| A Mandarin Speech Synthesis System Combining HMM Spectrum Model, ANN Prosody Model, and HNM Signal Model (結合隱藏式馬可夫頻譜模型、類神經網路韻律模型及HNM信號模型之國語(華語)語音合成系統) |

|

| Hung-Yan Gu (古鴻炎), Ming-Yen Lai (賴名彥), and Sung-Fung Tsai (蔡松峰); e-mail: guhy@mail.ntust.edu.tw |

2010, 2013 |

| (a) Supports timbre transformation (e.g. female => male) and a changeable speech rate.

(b) Supports synchronous playback of each syllable's pronunciation symbol and its synthetic speech.

(c) 375 (or 1,176) spoken sentences are used to train syllable HMMs or initial/final HMMs. (HMM training method: segmental k-means. For the syllable /pien/, the initial is /p/ and the final is /ien/.)

(d) Spectral parameters: 40 discrete cepstrum coefficients and their differentials per frame.

(e) ANN prosody models are trained with 375 sentences.

(f) Reference papers: model combination (2010), f0 generation (2011), HNM signal synthesis (2009), timbre transformation (2012). |
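The initial/final decomposition mentioned in (c) can be illustrated with a minimal sketch. It assumes a pinyin-like list of initial consonants (`INITIALS`) and a helper named `split_syllable`; both are hypothetical and chosen only for illustration, since the page does not state which symbol set or procedure the system actually uses.

```python
# Hypothetical inventory of Mandarin initials in a pinyin-like notation.
# The symbol set actually used by the system is not given on this page.
INITIALS = ["zh", "ch", "sh", "b", "p", "m", "f", "d", "t", "n", "l",
            "g", "k", "h", "j", "q", "x", "r", "z", "c", "s"]


def split_syllable(syllable):
    """Split a toneless syllable string into (initial, final)."""
    # Try longer initials first so that "zh" is matched before "z".
    for initial in sorted(INITIALS, key=len, reverse=True):
        if syllable.startswith(initial) and len(syllable) > len(initial):
            return initial, syllable[len(initial):]
    # No initial consonant: the whole syllable is treated as the final.
    return "", syllable


print(split_syllable("pien"))  # ('p', 'ien')
```

With such a split, an initial/final HMM set needs one model per initial and one per final, rather than one model per syllable.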

|

Synthetic speech examples |

|

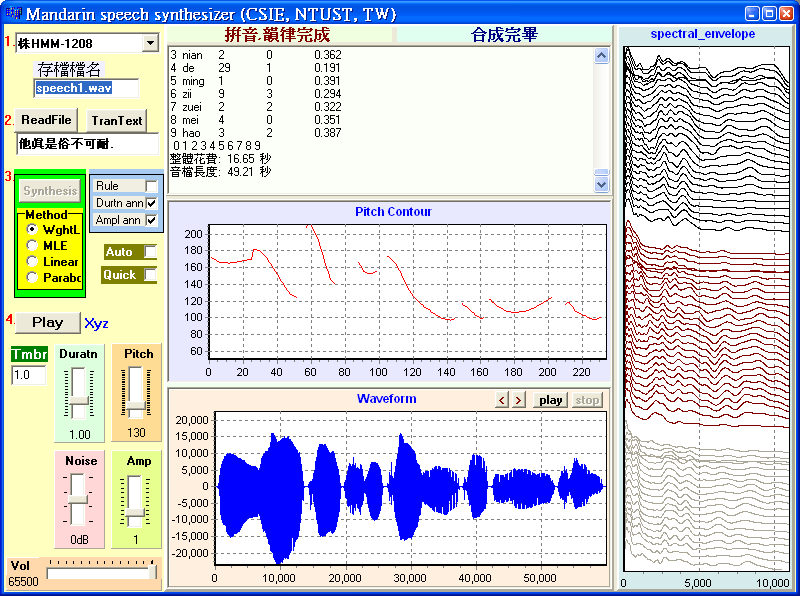

Program screen |

|

Recording of program execution |

|

Master's Thesis Abstract |

| Synthetic speech |

Example training utterances | ||

| Initial & Final HMM |

Syllable HMM |

||

|

|

using 1,176 sentences uttered by a male to train HMMs. |

|

|

|

using only 375 sentences uttered by the same male to train HMMs. |

|

|

|

using 375 sentences uttered by female A to train HMMs. |

|

|

|

using 375 sentences uttered by female B to train HMMs. |

|

|

using 801 sentences uttered by female B to train HMMs. |

||

| text content of Text A |

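因為不知道你的名子, 就讓我叫你白花樹, 春天, 當你的花朵盛開時, 就像點亮了滿樹白蠟燭. 春天因你而閃閃發光, 笑臉因你而更加明媚, 微風因你而飄送芬芳, 日子就像緩緩的溪水. 白花樹變成了一幅畫, 引來了那麼多賞花人. 白花樹從此有了一個家, 他的根連著無數人的心. 名子也許並不那麼重要, 讓人懷念的名子最美好.

English translation: Because I do not know your name, let me call you White-Flower Tree. In spring, when your blossoms are in full bloom, it is as if the whole tree were lit with white candles. Spring sparkles because of you, smiling faces grow brighter because of you, the breeze carries fragrance because of you, and the days are like a slowly flowing stream. The White-Flower Tree has become a painting that draws so many people to admire its blossoms. From then on the White-Flower Tree has had a home, its roots joined to the hearts of countless people. A name is perhaps not so important; a name that people remember fondly is the most beautiful. |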

||

| Synthetic speech |

||

| Initial & Final HMM |

Syllable HMM |

|

|

|

|

|

|

using only 375 sentences uttered by the same male to train HMMs. |

|

|

using 375 sentences uttered by female A to train HMMs. |

|

|

using 375 sentences uttered by female B to train HMMs. |

|

using 801 sentences uttered by female B to train HMMs. |

|

| text content of Text B |

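大清早, 公園裡的池塘, 是沒有皺紋的鏡子. 鏡子的一角, 映著打拳的太太, 鏡子的另一角, 映著讀報的老人, 鏡子的中間, 映著開白花的雲朵. 幾條姿態優雅的錦鯉, 游在不生皺紋的鏡子裡, 從打拳的身上, 游過去, 從讀報的紙上游過去, 從雲朵的花瓣上, 游過去.

English translation: Early in the morning, the pond in the park is a mirror without wrinkles. One corner of the mirror reflects a lady practicing shadowboxing; another corner reflects an old man reading a newspaper; the middle reflects clouds blooming like white flowers. A few graceful koi swim in the unwrinkled mirror: across the body of the lady practicing shadowboxing, across the pages of the newspaper, and across the petals of the clouds. |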

|

| Synthetic speech | ||

| Initial & Final HMM |

Syllable HMM |

|

|

|

|

|

|

using only 375 sentences uttered by the same male to train HMMs. |

|

using 375 sentences uttered by female A to train HMMs. |

|

|

|

using 375 sentences uttered by female B to train HMMs. |

|

using 801 sentences uttered by female B to train HMMs. |

|

| text content of Text C |

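天下雜誌民意調查顯示, 六成一的民眾擔心經濟傾中, 七成五的年輕人自認是台灣人. 天下雜誌昨天公布的最新民調指出, 高達六成一的民眾擔心台灣經濟過度依賴中國大陸. 天下民調的另一個數據也值得政府省思, 百分之六十二的受訪者認為自己是台灣人, 自認既是台灣人也是中國人的有百分之二十二, 自認是中國人的僅百分之八, 其中十八到二十九歲的年輕族群, 認為自己是台灣人的, 更高達百分之七十五, 是同類調查的新高. 天下雜誌對此, 引述亞太和平研究基金會董事長趙春山的解讀說, 以往選擇是中國人也是台灣人的比率最高, 牽涉的是對中華文化的認同及漢民族血濃於水的感情, 如今在國人的印象中, 談到中國代表的就是中華人民共和國, 因此更強化了台灣人對這片土地的認同感.

English translation: A CommonWealth Magazine opinion poll shows that 61 percent of the public worry about the economy tilting toward China, and 75 percent of young people identify themselves as Taiwanese. The latest poll, released by CommonWealth Magazine yesterday, indicates that as many as 61 percent of the public worry that Taiwan's economy is overly dependent on mainland China. Another figure from the poll also deserves the government's reflection: 62 percent of respondents consider themselves Taiwanese, 22 percent consider themselves both Taiwanese and Chinese, and only 8 percent consider themselves Chinese. Among respondents aged 18 to 29, those who consider themselves Taiwanese reach 75 percent, a record high for surveys of this kind. On this point, CommonWealth Magazine cited the interpretation of Chao Chun-shan, chairman of the Foundation on Asia-Pacific Peace Studies: in the past, the share choosing "both Chinese and Taiwanese" was the highest, reflecting identification with Chinese culture and the blood-is-thicker-than-water feeling among the Han people; now, in the public's impression, "China" stands for the People's Republic of China, which has further strengthened Taiwanese people's sense of identification with this land. |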

|

Program Screen |

Recording of Program Execution

| 1) male voice |

3) timbre transformation |

|

|

|

|

| 2) female voice | 4) changeable speech-rate | |

|

faster:  slower:

|

Master's Thesis Abstract of M. Y. Lai (2009)

Training Sentences

| 375 sentences |

801 sentences |